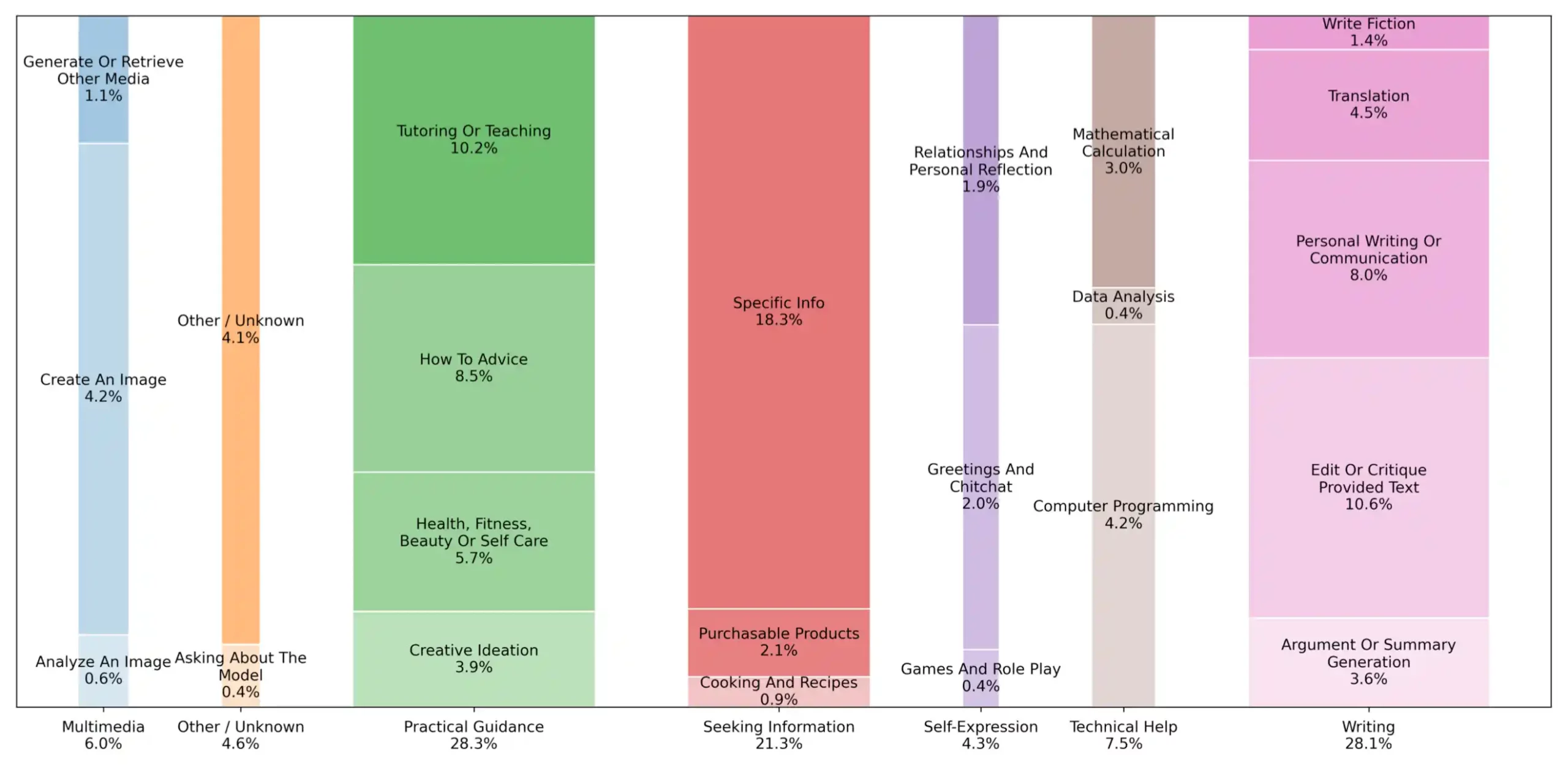

“While this means that some users don’t directly visit Wikipedia to get information, it is still among the most valuable datasets that these new formats of knowledge dissemination rely on. Almost all large language models (LLMs) train on Wikipedia datasets, and search engines and social media platforms prioritize its information to respond to questions from their users. That means that people are reading the knowledge created by Wikimedia volunteers all over the internet, even if they don’t visit wikipedia.org— this human-created knowledge has become even more important to the spread of reliable information online. And, in fact, Wikipedia continues to remain highly trusted and valued as a neutral, accurate source of information globally, as measured by large-scale surveys run regularly by the Wikimedia Foundation.

We welcome new ways for people to gain knowledge. However, LLMs, AI chatbots, search engines, and social platforms that use Wikipedia content must encourage more visitors to Wikipedia, so that the free knowledge that so many people and platforms depend on can continue to flow sustainably. With fewer visits to Wikipedia, fewer volunteers may grow and enrich the content, and fewer individual donors may support this work. Wikipedia is the only site of its scale with standards of verifiability, neutrality, and transparency powering information all over the internet, and it continues to be essential to people’s daily information needs in unseen ways. For people to trust information shared on the internet, platforms should make it clear where the information is sourced from and elevate opportunities to visit and participate in those sources.”

Source: New User Trends on Wikipedia – Diff