«We present a method to create universal, robust, targeted adversarial image patches in the real world. The patches are universal because they can be used to attack any scene, robust because they work under a wide variety of transformations, and targeted because they can cause a classifier to output any target class. These adversarial patches can be printed, added to any scene, photographed, and presented to image classifiers; even when the patches are small, they cause the classifiers to ignore the other items in the scene and report a chosen target class».
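The abstract names three properties: universal, robust and targeted. The toy sketch below shows how those properties can be turned into a single optimization objective. It rests on heavily simplified assumptions. The "classifier" is a hypothetical random linear model over 1-D, 16-pixel images. The only transformation is where the patch is pasted. All names (`train_patch`, `apply_patch`, ...) are illustrative. This is not the paper's code. It only shows the general recipe: maximize the target class's log-probability averaged over many scenes (universal) and many placements (robust), then clip pixels to a printable [0, 1] range.

```python
import math
import random

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def scores(W, x):
    # Hypothetical linear classifier: one weight row per class.
    return [sum(w_j * x_j for w_j, x_j in zip(row, x)) for row in W]

def apply_patch(scene, patch, offset):
    # Paste the patch over the scene at `offset` (the only "transformation" here).
    x = list(scene)
    x[offset:offset + len(patch)] = patch
    return x

def expected_log_prob(W, patch, samples, target):
    # Average log p(target) over (scene, offset) pairs.
    total = 0.0
    for scene, offset in samples:
        p = softmax(scores(W, apply_patch(scene, patch, offset)))
        total += math.log(p[target])
    return total / len(samples)

def train_patch(W, samples, target, size, steps=200, lr=0.05):
    patch = [0.5] * size  # start from a flat gray patch
    for _ in range(steps):
        grad = [0.0] * size
        for scene, offset in samples:
            p = softmax(scores(W, apply_patch(scene, patch, offset)))
            for i in range(size):
                j = offset + i
                # d log p_target / d x_j = W[target][j] - sum_k p_k * W[k][j]
                grad[i] += W[target][j] - sum(p_k * W[k][j] for k, p_k in enumerate(p))
        # Projected gradient ascent: average the gradient, step, clip to [0, 1].
        patch = [min(1.0, max(0.0, v + lr * g / len(samples)))
                 for v, g in zip(patch, grad)]
    return patch

rng = random.Random(0)
N, K, P, TARGET = 16, 3, 4, 2  # image length, classes, patch length, target class
W = [[rng.uniform(-1, 1) for _ in range(N)] for _ in range(K)]
samples = [([rng.random() for _ in range(N)], rng.randrange(N - P + 1))
           for _ in range(50)]

initial = expected_log_prob(W, [0.5] * P, samples, TARGET)
patch = train_patch(W, samples, TARGET, P)
final = expected_log_prob(W, patch, samples, TARGET)
print(f"mean log p(target): {initial:.3f} -> {final:.3f}")
```

For a linear model the averaged objective is concave in the patch. With a step this small, each projected step should not lower the objective. With a real network, the gradient would come from automatic differentiation. The sampled transformations would typically also include rotation, scaling and lighting changes.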

Tag: image processing

«Each of these images took about 18 days for the computers to generate, before reaching a point that the system found them believable»

Source : How an A.I. ‘Cat-and-Mouse Game’ Generates Believable Fake Photos – The New York Times

«Arsenal’s smart assistant AI suggests settings based on your subject and environment. It uses an advanced neural network to pick the optimal settings for any scene (using similar algorithms to those in self driving cars). Like any good assistant, it then lets you control the final shot. Here’s how it works…»

Source : Meet Arsenal, the Smart Camera Assistant | Features

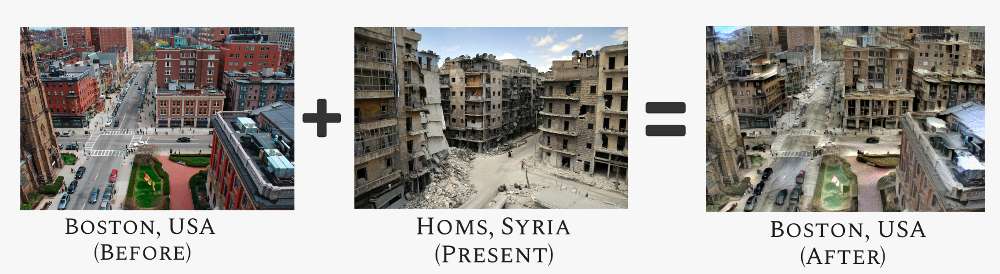

«Can we use AI to increase empathy for victims of far-away disasters by making our homes appear similar to the homes of victims?»

Source : Deep Empathy

«Unsupervised image-to-image translation aims at learning a joint distribution of images in different domains by using images from the marginal distributions in individual domains. Since there exists an infinite set of joint distributions that can arrive [at] the given marginal distributions, one could infer nothing about the joint distribution from the marginal distributions without additional assumptions».

Source : Unsupervised Image-to-Image Translation Networks | Research
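The last sentence of the quote can be checked on a toy case. Take two domain-A images and two domain-B images, each seen with probability 1/2. The hypothetical `coupling(t)` below builds a different joint table for every t in [0, 1/2], yet all of them share the same marginals. The marginals alone therefore cannot distinguish "a1 pairs with b1" (t = 1/2) from "a1 pairs with b2" (t = 0) or from no correspondence at all (t = 1/4). The 2x2 setup is an illustrative assumption, not anything taken from the paper.

```python
from fractions import Fraction

HALF = Fraction(1, 2)

def coupling(t):
    """Joint table P(a, b): rows are domain-A images, columns domain-B images.
    Valid for any t in [0, 1/2]."""
    return [[t, HALF - t],
            [HALF - t, t]]

def marginals(joint):
    rows = [sum(r) for r in joint]        # P(a)
    cols = [sum(c) for c in zip(*joint)]  # P(b)
    return rows, cols

for t in (Fraction(0), Fraction(1, 4), HALF):
    joint = coupling(t)
    print(t, joint, marginals(joint))
```

Exact `Fraction` arithmetic is used so that the equality of the marginals is exact rather than approximate.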

«Developed out of the Max Planck Institute for Intelligent Systems in Germany, researchers propose a new approach to traditional (and usually disappointing) single-image super-resolution (SISR) technology on the market».

Source : This new AI can make your low resolution photos great again

«Breakdown reel showcasing David Fincher’s invisible visual effects in the Netflix Original Series Mindhunter».

«Another experiment consists of measuring students’ attention levels in real time by filming them in class with two cameras. Every teacher loses the attention of their audience at some point. Some notice and react: for example, they change the pace of their voice or mention that the topic will be on the next test. Others do not realize they have lost the students’ attention, because they only look at those in the front row, who are following closely. So one could consider sending an alert to that teacher’s smartphone to let them know that only 10% of the students are still paying attention to the lesson».

Source : « Nous mesurons en temps réel le niveau d’attention des élèves » (“We measure students’ attention levels in real time”)

«Yesterday at Adobe MAX, the company showed off a crazy new technology they’re working on called ‘Adobe Cloak.’ It’s like the Content Aware Fill feature in Photoshop, except that it can erase moving objects from video».

via dpreview.com.

«Everything the vehicle “sees” with its sensors, all of the images, mapping data, and audio material picked up by its cameras, needs to be processed by giant PCs in order for the vehicle to make split-second decisions. All this processing must be done with multiple levels of redundancy to ensure the highest level of safety. This is why so many self-driving operators prefer SUVs, minivans, and other large wheelbase vehicles: autonomous cars need enormous space in the trunk for their big “brains.” But Nvidia claims to have shrunk down its GPU, making it an easier fit for production vehicles».

Source : Nvidia says its new supercomputer will enable the highest level of automated driving – The Verge