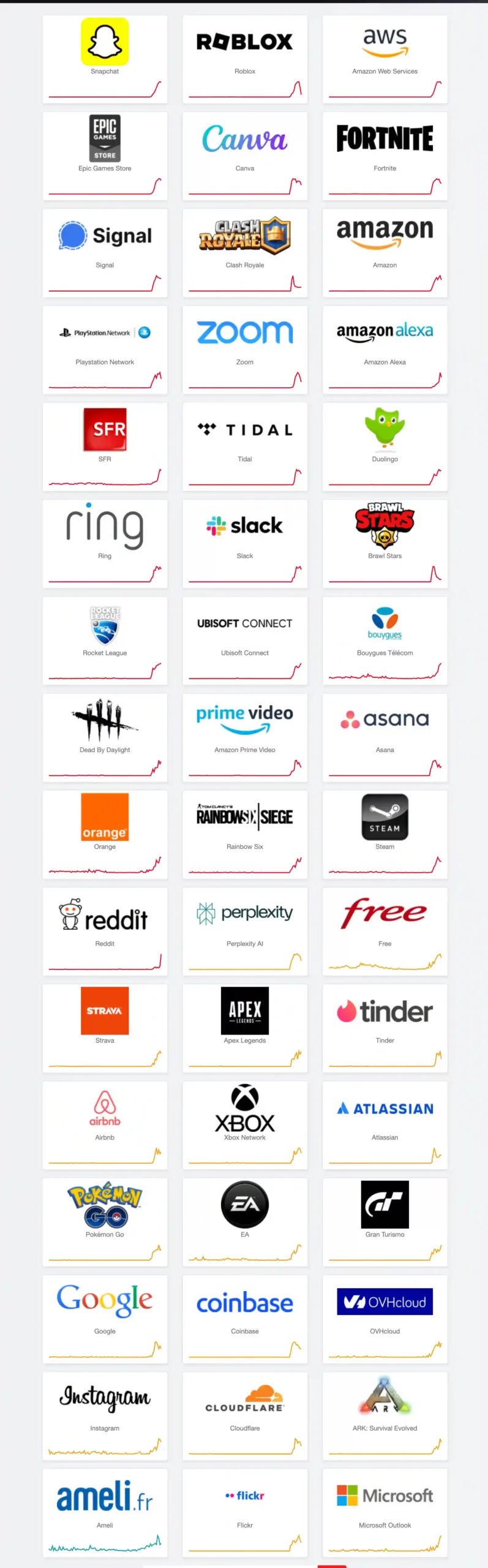

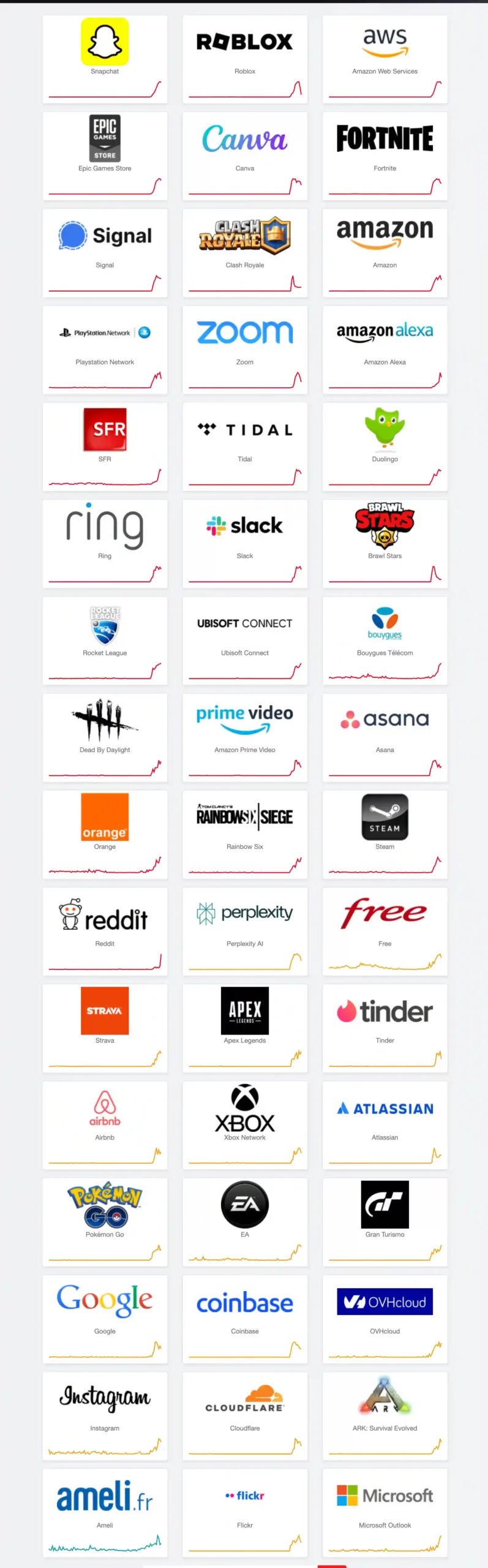

“Amazon Web Services (AWS) is currently experiencing a major outage that has taken down online services, including Amazon, Alexa, Snapchat, Fortnite, ChatGPT, Epic Games Store, Epic Online Services, and more. The AWS status checker is reporting that multiple services are “impacted” by operational issues, and that the company is “investigating increased error rates and latencies for multiple AWS services in the US-EAST-1 Region” — though outages are also impacting services in other regions globally.

Users on Reddit are reporting that the Alexa smart assistant is down and unable to respond to queries or complete requests, and in my own experience, I found that routines like pre-set alarms are not functioning. The AWS issue also appears to be impacting platforms running on its cloud network, including Perplexity, Airtable, Canva, and the McDonalds app. The cause of the outage hasn’t been confirmed, and it’s unclear when regular service will be restored.”

“Perplexity is down right now,” Perplexity CEO Aravind Srinivas said on X. “The root cause is an AWS issue. We’re working on resolving it.”

Source: Major AWS outage takes down Fortnite, Alexa, Snapchat, and more | The Verge, and Downdetector