“The Cerebras Wafer Scale Engine [is] 46,225 mm² with 1.2 Trillion transistors and 400,000 AI-optimized cores. By comparison, the largest Graphics Processing Unit is 815 mm² and has 21.1 Billion transistors.”

Source : Home – Cerebras

“We present a method for detecting one very popular Photoshop manipulation — image warping applied to human faces — using a model trained entirely using fake images that were automatically generated by scripting Photoshop itself. We show that our model outperforms humans at the task of recognizing manipulated images, can predict the specific location of edits, and in some cases can be used to ‘undo’ a manipulation to reconstruct the original, unedited image.”

Source : Detecting Photoshopped Faces by Scripting Photoshop

“Over the past few months, I have been collecting AI cheat sheets. From time to time I share them with friends and colleagues and recently I have been getting asked a lot, so I decided to organize and share the entire collection.”

Source : Cheat Sheets for AI, Neural Networks, Machine Learning, Deep Learning & Big Data

“After training our agents for an additional week, we played against MaNa, one of the world’s strongest StarCraft II players, and among the 10 strongest Protoss players. AlphaStar again won by 5 games to 0, demonstrating strong micro and macro-strategic skills. ‘I was impressed to see AlphaStar pull off advanced moves and different strategies across almost every game, using a very human style of gameplay I wouldn’t have expected,’ he said. ‘I’ve realised how much my gameplay relies on forcing mistakes and being able to exploit human reactions, so this has put the game in a whole new light for me. We’re all excited to see what comes next.’”

Source : AlphaStar: Mastering the Real-Time Strategy Game StarCraft II | DeepMind

“We propose an alternative generator architecture for generative adversarial networks, borrowing from style transfer literature. The new architecture leads to an automatically learned, unsupervised separation of high-level attributes (e.g., pose and identity when trained on human faces) and stochastic variation in the generated images (e.g., freckles, hair), and it enables intuitive, scale-specific control of the synthesis. The new generator improves the state-of-the-art in terms of traditional distribution quality metrics, leads to demonstrably better interpolation properties, and also better disentangles the latent factors of variation. To quantify interpolation quality and disentanglement, we propose two new, automated methods that are applicable to any generator architecture. Finally, we introduce a new, highly varied and high-quality dataset of human faces.”

via Tero Karras FI (YouTube)
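The phrase “borrowing from style transfer literature” refers to adaptive instance normalization (AdaIN), which the StyleGAN generator uses to inject a style at each resolution: every feature channel is normalized to zero mean and unit variance over its spatial positions, then rescaled and shifted by style-dependent values. The sketch below is a minimal pure-Python illustration of that one operation, not the paper's implementation. It assumes the per-channel scale and bias are given directly; in StyleGAN they come from a learned affine transform of the intermediate latent vector, which is omitted here. The function name `adain` and the `eps` value are illustrative choices.

```python
import math
from typing import List

# One channel is a 2-D grid of activations (rows x cols).
Channel = List[List[float]]


def adain(content: List[Channel],
          style_scale: List[float],
          style_bias: List[float],
          eps: float = 1e-5) -> List[Channel]:
    """Adaptive instance normalization over a list of channels.

    For each channel: subtract its spatial mean, divide by its spatial
    standard deviation, then multiply by the style scale and add the
    style bias for that channel.
    """
    out: List[Channel] = []
    for channel, scale, bias in zip(content, style_scale, style_bias):
        values = [v for row in channel for v in row]
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)
        std = math.sqrt(var + eps)  # eps avoids division by zero
        out.append([[scale * (v - mean) / std + bias for v in row]
                    for row in channel])
    return out


if __name__ == "__main__":
    # A single 2x2 channel; after AdaIN its mean becomes the style bias
    # (10.0) and its standard deviation (almost exactly) the style scale (2.0).
    features = [[[1.0, 2.0], [3.0, 4.0]]]
    print(adain(features, style_scale=[2.0], style_bias=[10.0]))
```

Because the content statistics are discarded and replaced by the style's, feeding different styles into layers at different resolutions is what gives the “scale-specific control” the abstract describes.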

“Modified implementation of DCGAN focused on generative art. Includes pre-trained models for landscapes, nude-portraits, and others.”

Source : GitHub – robbiebarrat/art-DCGAN
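A DCGAN generator turns a small latent vector into an image through a stack of transposed (“fractionally strided”) convolutions, each of which roughly doubles the spatial size. The arithmetic below assumes the layer settings of the standard DCGAN setup (a first layer with kernel 4, stride 1, padding 0 that maps a 1x1 latent to 4x4, then layers with kernel 4, stride 2, padding 1); the quote does not state art-DCGAN's exact configuration, so treat these numbers as an illustration. The helper names are hypothetical.

```python
from typing import List


def conv_transpose_out(size: int, kernel: int = 4, stride: int = 2,
                       padding: int = 1) -> int:
    """Output side length of a transposed convolution
    (dilation 1, no output padding): (in - 1) * stride - 2 * padding + kernel.
    """
    return (size - 1) * stride - 2 * padding + kernel


def generator_sizes(upsampling_layers: int = 4) -> List[int]:
    """Spatial size after each generator layer, starting from a 1x1 latent."""
    sizes = [1]
    # First layer projects the 1x1 latent to a 4x4 feature map.
    sizes.append(conv_transpose_out(1, kernel=4, stride=1, padding=0))
    # Each remaining layer doubles the side length.
    for _ in range(upsampling_layers):
        sizes.append(conv_transpose_out(sizes[-1]))
    return sizes


if __name__ == "__main__":
    print(generator_sizes())  # [1, 4, 8, 16, 32, 64]
```

Adding one more stride-2 layer (`generator_sizes(5)`) ends at 128x128, which is how higher-resolution variants are usually obtained.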

“The members of Obvious don’t deny that they borrowed substantially from Barrat’s code, but until recently, they didn’t publicize that fact either. This has created unease for some members of the AI art community, which is open and collaborative and taking its first steps into mainstream attention. Seeing an AI portrait on sale at Christie’s is a milestone that elevates the entire community, but has this event been hijacked by outsiders?”

Source : How three French students used borrowed code to put the first AI portrait in Christie’s – The Verge

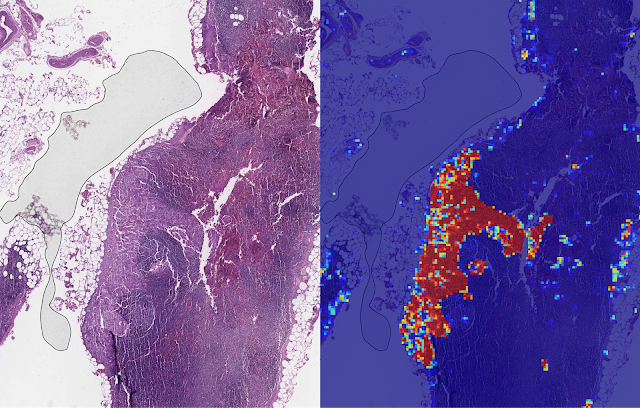

“In both datasets, LYNA was able to correctly distinguish a slide with metastatic cancer from a slide without cancer 99% of the time. Further, LYNA was able to accurately pinpoint the location of both cancers and other suspicious regions within each slide, some of which were too small to be consistently detected by pathologists. As such, we reasoned that one potential benefit of LYNA could be to highlight these areas of concern for pathologists to review and determine the final diagnosis.”

Source : Google AI Blog: Applying Deep Learning to Metastatic Breast Cancer Detection

“In a world where surveillance technology is being deployed everywhere from airports and stadiums to public schools and hotels and raising a plethora of privacy concerns, it’s perhaps inevitable that farms on land and at sea would find ways to exploit it to improve productivity. Just this year, American agribusiness giant Cargill Inc. said it was working with an Irish tech start-up on a facial-recognition system to monitor cows so farmers can adjust feeding regimens to enhance milk production. Scanners will allow them to track food and water intake and even detect when females are having fertile days. Salmon farming may be next in line. As fish vies with beef and chicken as the global protein food of choice, exporters like Norway, the world’s biggest producer of the pinkish-orange fish, have become the focal point for radical marine-farming methods designed to help the $232 billion aquaculture industry feed the world.”

Source : Salmon Farmers Are Scanning Fish Faces to Fight Killer Lice – Bloomberg

“Piano Genie is in some ways reminiscent of video games such as Rock Band and Guitar Hero that are accessible to novice musicians, with the crucial difference that users can freely improvise on Piano Genie rather than re-enacting songs from a fixed repertoire. You can try it out yourself via our interactive web demo!”

© 2026 no-Flux